Introducing the Rfacebook package

My new R package Rfacebook is now available on CRAN. This package is intended to provide access to the Facebook Graph API within R. It includes a series of functions that allow R users to extract their private information, search for public Facebook posts that mention specific keywords, capture data from Facebook pages, and update their Facebook status.

In this post I describe and illustrate the current functions included in the package. Used in combination with other R packages, Rfacebook can be a very powerful tool for researchers interested in analyzing social media data. For any question, or to report any bug, you can contact me at pablo.barbera[at]nyu.edu or via twitter (@p_barbera), or on the comments section below.

Installation and authentication

Rfacebook can be installed directly from CRAN, but the most updated version will always be on GitHub. The code below shows how to install from both sources.

install.packages("Rfacebook") # from CRAN

library(devtools)

install_github("Rfacebook", "pablobarbera", subdir = "Rfacebook") # from GitHub

Most API requests require the use of an access token. There are two ways of making authenticated requests with Rfacebook. One option is to generate a temporary token on the Graph API Explorer. Then just copy and paste the code into the R console and save it as a string vector to be passed as an argument to any Rfacebook function, as I show below. However, note that this access token will only be valid for two hours. It is possible to generate a 'long-lived' token (valid for two months) using the fbOAuth function, but the process is a bit more complicated. For a step-by-step tutorial, check this fantastic blog post by JulianHi. (Also note that the authentication process does not work correctly from RStudio, but you can create your long-lived token in R and then open it from RStudio to continue your session.)

library(Rfacebook)

# token generated here: https://developers.facebook.com/tools/explorer

token <- "XXXXXXXXXXXXXX"

me <- getUsers("pablobarbera", token, private_info = TRUE)

me$name # my name

## [1] "Pablo Barberá"

me$hometown # my hometown

## [1] "Cáceres, Spain"

The example above shows how to retrieve information about a Facebook user. Note that this can be applied to any user (or vector of users), and that both user screen names or IDs can be used as arguments. Private information will be, of course, returned only for friends and if the token has been given permission to access such data.

Analyzing your network of friends

The function getFriends allows the user to capture information about his/her Facebook friends. Since user IDs are assigned in consecutive order, it's possible to find out which of our friends was the first one to open a Facebook account. (In my case, one of my friends is user ~35,000 – he was studying in Harvard around the time Facebook was created.)

my_friends <- getFriends(token, simplify = TRUE)

head(my_friends$id, n = 1) # get lowest user ID

## [1] "35XXX"

To access additional information about a list of friends (or any other user), you can use the getUsers function, which will return a data frame with users' Facebook data. Some of the variables that are available for all users are: gender, language, and country. It is also possible to obtain relationship status, hometown, birthday, and location for our friends if we set private_info=TRUE.

A quick analysis of my Facebook friends (see below) indicates that 65% of them are male, more than half of them live in the US and use Facebook in English, and only 2 indicate that their relationship status “is complicated”. (Note that language and country are extracted from the locale codes.)

my_friends_info <- getUsers(my_friends$id, token, private_info = TRUE)

table(my_friends_info$gender) # gender

## female male

## 155 292

table(substr(my_friends_info$locale, 1, 2)) # language

## ca da de en es fi fr gl it nb nl pl pt ru tr

## 38 2 10 302 82 1 6 1 11 1 1 1 2 1 2

table(substr(my_friends_info$locale, 4, 5)) # country

## BR DE DK ES FI FR GB IT LA NL NO PL PT RU TR US

## 1 10 2 82 1 6 79 11 39 1 1 1 1 1 2 223

table(my_friends_info$relationship_status)["It's complicated"] # relationship status

## It's complicated

## 2

Finally, the function getNetwork extracts a list of all the mutual friendships among the user friends, which can be then used to analyze and visualize a Facebook ego network. The first step is to use the getNetwork function. If the format option is set equal to edgelist, it will return a list of all the edges of that network. If format=adj.matrix, then it will return an adjacency matrix of dimensions (n x n), with n being the number of friends, and 0 or 1 indicating whether the user in row 'i' is also friends with user in column 'j'.

mat <- getNetwork(token, format = "adj.matrix")

|=================================================================| 100%

dim(mat)

## [1] 462 462

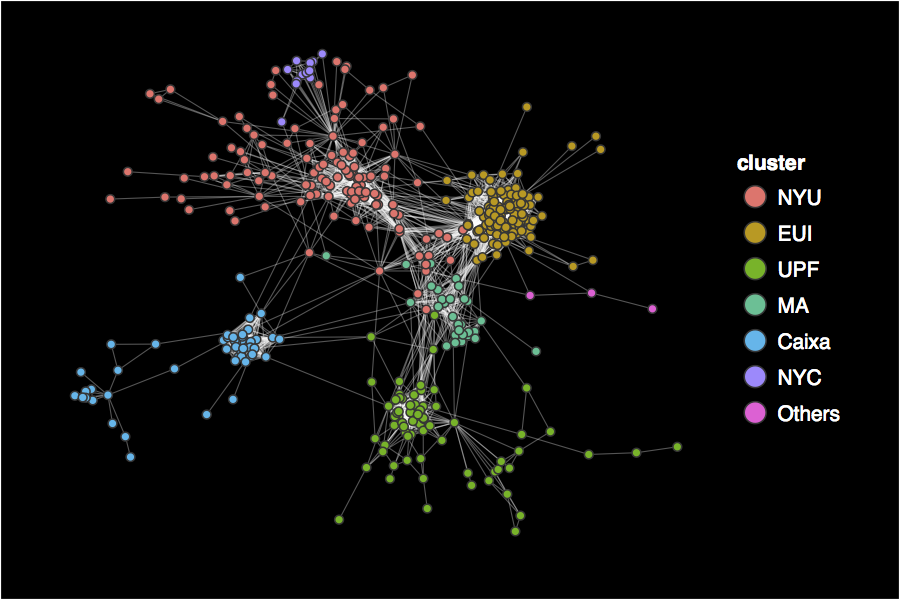

This adjacency matrix can then be converted into an igraph object, which facilitates the task of computing measures of centrality, discovering communities, or visualizing the structure of the network. As an illustration, the plot below displays my Facebook ego network, where the colors represent clusters discovered with a community detection algorithm, which clearly overlap with membership in offline communities. This was one of the examples from my workshop on data visualization with R and ggplot2. The code to replicate it with your own Facebook data is available here. David Smith has also posted code to generate a similar network plot.

Searching public Facebook posts

Rfacebook can also be used to collect public Facebook posts that mention a given keyword using the searchFacebook function. As shown in the example below, it is possible to search within specific a time range with the until and since options. If they are left empty, the most recent public status updates will be returned. The n argument specifies the maximum number of posts to capture, as long as there are enough posts that contain the keyword. Due to the limitations of the API, it is only possible to search for a single keyword, and the results will not include messages that are more than around two weeks old.

posts <- searchFacebook(string = "upworthy", token, n = 500,

since = "25 november 2013 00:00", until = "25 november 2013 23:59")

## 148 posts

posts[which.max(posts$likes_count), ]

## from_id from_name message

## 87 354522044588660 Upworthy Is this real life?!?!? - Adam Mordecai

## created_time type link

## 87 2013-11-25T17:10:47+0000 link http://u.pw/1gbVzsV

## id likes_count comments_count shares_count

## 87 354522044588660_669076639799864 8174 559 2701

The code above shows which public Facebook post mentioning the website “upworthy” and published on November 25th received the highest number of likes. It also illustrates the type of information that is returned for each post: the name and user ID of who posted it, the text of the status update (“message”), a timestamp, the type of post (link, status, photo or video), the URL of the link, the ID of the post, and the counts of likes, comments, and shares. Going to facebook.com and pasting the ID of the post after the slash shows that the most popular public Facebook post was this one.

Analyzing data from a Facebook page

Facebook pages are probably the best source of information about how individuals use this social networking site, since all posts, likes, and comments can be collected combining the getPage and getPost functions. For example, assume that we're interested in learning about how the Facebook page Humans of New York has become popular, and what type of audience it has. The first step would be to retrieve a data frame with information about all its posts using the code below. To make sure I collect every single post, I set n to a very high number, and the function will stop automatically when it reaches the total number of available posts (3,674).

page <- getPage("humansofnewyork", token, n = 5000)

## 100 posts (...) 3674 posts

page[which.max(page$likes_count), ]

## from_id from_name

## 1915 102099916530784 Humans of New York

message

## 1915 Today I met an NYU student named Stella. I took a photo of her. (...)

## created_time type

## 1915 2012-10-19T00:27:36+0000 photo

## link

## 1915 https://www.facebook.com/photo.php?fbid=375691212504985&set=a.102107073196735.4429.102099916530784&type=1&relevant_count=1

## id likes_count comments_count

## 1915 102099916530784_375691225838317 894583 117337

## shares_count

## 1915 60528

The most popular post ever received almost 900,000 likes and 120,000 comments, and was shared over 60,000 times. As we can see, the variables returned for each post are the same as when we search for Facebook posts: information about the content of the post, its author, and its popularity and reach. Using this data frame, it is relatively straightforward to visualize how the popularity of Humans of New York has grown exponentially over time. The code below illustrates how to aggregate the metrics by month in order to compute the median count of likes/comments/shares per post: for example, in November 2013 the average post received around 40,000 likes.

## convert Facebook date format to R date format

format.facebook.date <- function(datestring) {

date <- as.POSIXct(datestring, format = "%Y-%m-%dT%H:%M:%S+0000", tz = "GMT")

}

## aggregate metric counts over month

aggregate.metric <- function(metric) {

m <- aggregate(page[[paste0(metric, "_count")]], list(month = page$month),

mean)

m$month <- as.Date(paste0(m$month, "-15"))

m$metric <- metric

return(m)

}

# create data frame with average metric counts per month

page$datetime <- format.facebook.date(page$created_time)

page$month <- format(page$datetime, "%Y-%m")

df.list <- lapply(c("likes", "comments", "shares"), aggregate.metric)

df <- do.call(rbind, df.list)

# visualize evolution in metric

library(ggplot2)

library(scales)

ggplot(df, aes(x = month, y = x, group = metric)) + geom_line(aes(color = metric)) +

scale_x_date(breaks = "years", labels = date_format("%Y")) + scale_y_log10("Average count per post",

breaks = c(10, 100, 1000, 10000, 50000)) + theme_bw() + theme(axis.title.x = element_blank())

To retrieve more information about each individual post, you can use the getPost function, which will return the same variables as above, as well as a list of comments and likes. Continuing with my example, the code below shows how to collect a list of 1,000 users who liked the most recent post, for whom we will also gather information in order to analyze the audience of this page in terms of gender, language, and country.

post_id <- head(page$id, n = 1) ## ID of most recent post

post <- getPost(post_id, token, n = 1000, likes = TRUE, comments = FALSE)

users <- getUsers(post$likes$from_id, token)

## 500 users -- 1000 users --

table(users$gender) # gender

## female male

## 784 209

table(substr(users$locale, 4, 5)) # country

## AZ BE BG BR CA CZ DE DK EE ES FR GB GR HR HU IL IR IT

## 1 1 1 8 1 2 8 8 1 4 5 141 1 3 1 1 1 9

## LA LT NL PI PL PT RO RS RU SE SI SK TH US VA

## 8 3 5 5 5 2 1 1 8 2 1 1 1 758 1

table(substr(users$locale, 1, 2)) # language

## az bg cs da de el en es et fa fr he hr hu it la lt nl

## 1 1 2 8 8 1 904 12 1 1 6 1 3 1 9 1 3 6

## pl pt ro ru sk sl sr sv th

## 5 10 1 8 1 1 1 2 1

Updating your Facebook status from R

Finally, yes:

updateStatus("You can also update your Facebook status from R", token)

## Success! Link to status update:

## http://www.facebook.com/557698085_10152090718708086

However, to make this work you will need to get a long-lived OAuth token first, setting private_info=TRUE in the fbOAUth function.

Concluding...

I hope this package is useful for R users interested in working with social media data. Future releases of the package will include additional functions to cover other aspects of the API. My plan is to keep the GitHub version up to date fixing any possible bugs, and to release only major versions to CRAN.

You can contact me at pablo.barbera[at]nyu.edu or via twitter (@p_barbera) for any question or suggestion you might have, or to report any bugs in the code.

comments powered by Disqus